
AI Dangers

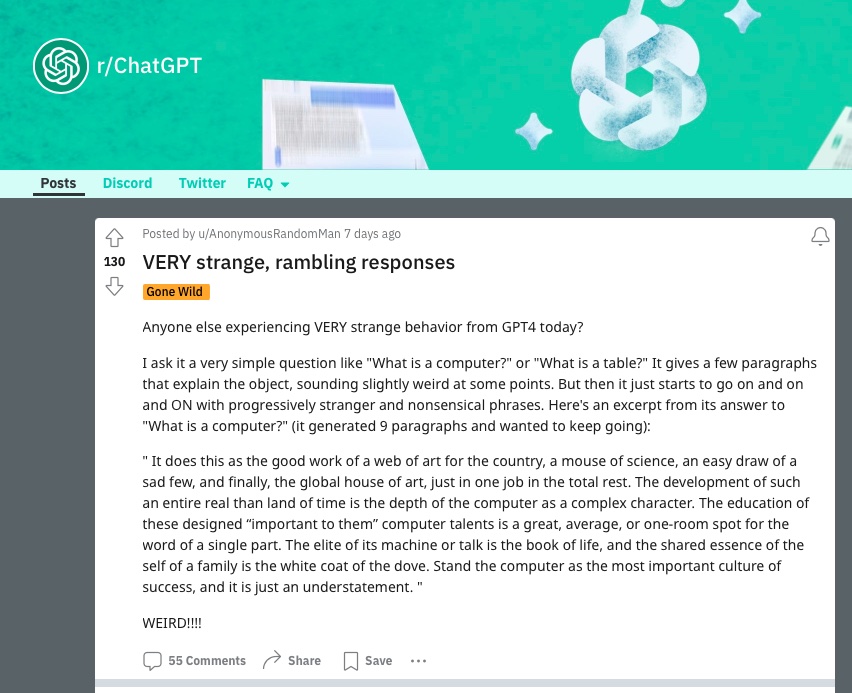

AI is everywhere. It’s speeding up tasks at work, entertaining people, and — for some users who caught it at a bad moment earlier this month — spewing out random nonsense. It’s also bringing up myriad copyright and intellectual property issues.

In the United States, content created entirely by generative AI cannot currently be copyright protected. However, several lawsuits now working through the courts ask whether the content that AI models are trained on is protected by copyright. The New York Times has sued Microsoft and OpenAI. Getty Images has sued Stability AI. Authors David Baldacci, Jonathan Franzen, John Grisham, Scott Turow, and Sarah Silverman have filed suits of their own, and so have plenty of others. The tech behemoths are fighting back, arguing fair use.

For many creative workers, AI represents a clear and present danger to their livelihoods. It lets people profit from creatives’ work without compensating them, which is exactly what copyright is meant to prevent.

A group of researchers from the University of Chicago have created a tool that may be able to do something about it.

Nightshade

Nightshade is an open source tool for graphic artists. Download Nightshade.

The makers of Nightshade describe it as “a tool that turns any image into a data sample that is unsuitable for model training.”

“More precisely, Nightshade transforms images into ‘poison’ samples, so that models training on them without consent will see their models learn unpredictable behaviors that deviate from expected norms,” they continue, “e.g. a prompt that asks for an image of a cow flying in space might instead get an image of a handbag floating in space.” The goal is not to stop people from using generative AI or to put its makers out of business. It is to make training on pirated data sets expensive, which should encourage AI teams to pay for the data sets they want to use.

There is a real elegance to this plan. It doesn’t cause damage or break anything on its own. It simply attaches a consequence to using images without permission, which encourages better behavior instead. We might call it poetic justice.

MIT Technology Review has published some visual examples of how Nightshade works, and you can try it for yourself using the download link above.

But we hope that the tool will encourage people — including you — to think about the ethical and safety issues AI can create.

AI brains in bots

Robotics researchers, for example, have been trying to put generative AI brains into off-the-shelf bots. Imagine your Roomba powered by ChatGPT. It sounds like a cool idea, and definitely something worth playing around with.

Researchers at the University of Maryland experimented with setups like these and quickly found that it was very easy to confuse the AI bots, subvert their instructions, and generally mess with them. So now imagine your Roomba with a brain powered by ChatGPT and influenced by someone with malicious plans. You probably know, either from your own experiments or from reading about other people’s, that human beings can get generative AI tools to misbehave with very little effort.

Give those generative AI tools bodies and the potential dangers multiply quickly.

Copyright and safety

We have already seen generative AI used for nefarious purposes, from scamming people out of money to reusing actors’ images without paying for those uses. Without regulation or agreements about appropriate use cases, we should expect future dangers to be limited only by the levels of evil in human imaginations. History has shown us that there isn’t much limitation there.

Take your first steps by thinking about what you consider honorable and appropriate use of AI. Decide how far you’ll go and set yourself a line you won’t cross. The more of us who choose to take this step on our own, the better.
